We all know the power of Large Language Models (LLMs). They can summarize, analyze, and generate text with impressive fluency. But what happens when you feed them complex documents like PDFs or PowerPoints? Often, the crucial information embedded in the layout and formatting gets lost in translation. A heading loses its significance, a table becomes a jumbled mess of text, and the LLM struggles to truly understand the content.

Lost context leads to limited understanding and LLM hallucinations

Think about it. The way a document is structured provides vital context. A heading clearly signals a new topic, bullet points highlight key takeaways, and tables organize data into meaningful relationships. When an LLM only sees a flat text conversion, it misses these cues. And guess what - LLMs actually make things up! Yes, it’s true. If the LLM can’t find what it needs, it will give wrong answers, sometimes even altering information that was accurate in the source. This is what the industry calls “hallucinations” - when LLMs invent an answer. Limited context can also lead to:

- Inaccurate Information Extraction: Trying to pull specific data from a complex table in plain text? Good luck! The LLM might struggle to identify the correct rows and columns.

- Hallucinations: When an LLM lacks a solid structural understanding, it is more prone to generating inaccurate information and to misinterpreting or rewriting the content.

- Lack of deep linking capabilities: Without information about a document's structure, the LLM response cannot link back to the source content with details such as page numbers, exact paragraph locations, or table rows. That sets you up for really difficult document analysis, processes, and any other downstream activities you need to do with these documents. Trying to amend a contract but you don't have the correct placement of the clause? Yikes! Ultimately, this impairs the ability to create user experiences when dealing with complex, unstructured content.

Vertesia’s Semantic DocPrep is the answer

Introducing Vertesia’s Semantic DocPrep, a generative AI-powered service designed to improve complex document processing with LLMs. Vertesia intelligently transforms your documents, starting with PDFs, into structured XML files, creating a rich, semantically aware representation that offers the following benefits over other text formats:

- LLMs better understand complex documents and maintain focus on complex structures such as long tables without getting lost. That means you get accurate data that you can analyze, search, and use to enhance business processes.

- We aren’t asking LLMs to return the content of the documents. Instead, each element of the content is assigned an ID and the LLM refers to elements by ID, so it never has the opportunity to rewrite the content = no hallucinations.

Vertesia's Semantic DocPrep directly addresses the limitations of processing plain text, paving the way for more accurate, reliable, and insightful interaction and automation with your documents.
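To make the ID idea concrete, here is a minimal sketch in Python. It is not Vertesia's implementation or API: the XML layout, the tag names, the `id` and `page` attributes, and the helper functions are all assumptions made up for illustration. The point it shows is that if the model only returns element IDs, the text in the final answer is always copied verbatim from the source, and an ID that does not exist is rejected instead of being trusted.

```python
import xml.etree.ElementTree as ET

# Hypothetical structured output. Tag names and attributes are assumptions,
# not the actual Semantic DocPrep schema.
DOC_XML = """
<document>
  <section id="s1" page="1">
    <heading id="h1">Payment Terms</heading>
    <p id="p1">Payment is due within 30 days of the invoice date.</p>
    <p id="p2">Late payments accrue interest at 1.5% per month.</p>
  </section>
</document>
"""


def build_index(xml_text):
    """Map each element ID to its element so text can be looked up verbatim."""
    root = ET.fromstring(xml_text)
    return {el.get("id"): el for el in root.iter() if el.get("id")}


def resolve(ids, index):
    """Return (id, source text) pairs; unknown IDs raise instead of being guessed."""
    resolved = []
    for element_id in ids:
        if element_id not in index:
            raise KeyError(f"model returned an unknown element ID: {element_id}")
        resolved.append((element_id, (index[element_id].text or "").strip()))
    return resolved


index = build_index(DOC_XML)

# Pretend the model answered "which clause covers late fees?" with IDs only.
model_answer_ids = ["p2"]
print(resolve(model_answer_ids, index))
```

Because the lookup goes back to the source element, the same ID could also carry location data (here the hypothetical `page` attribute on the parent section), which is what makes deep linking possible.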

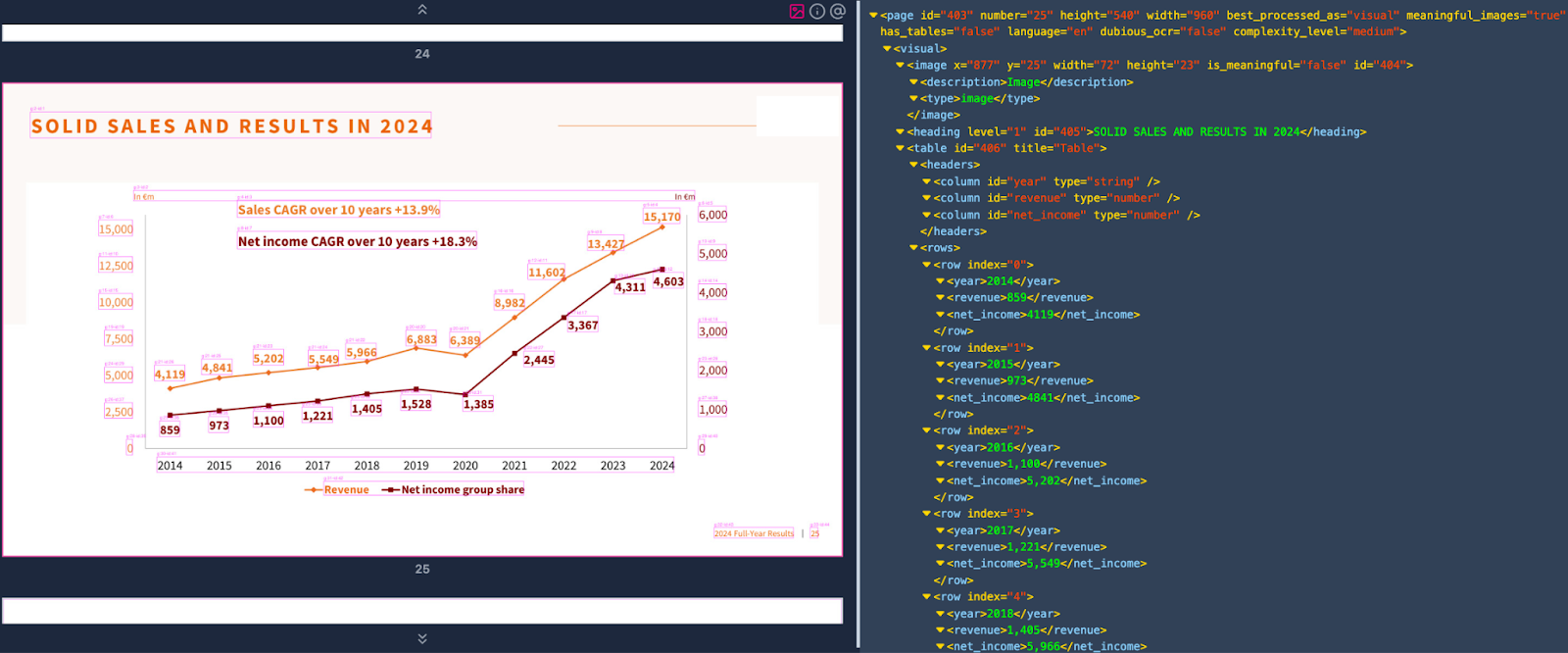

Graph converted to XML using Semantic DocPrep

So, how do we do it?

- Semantically deconstruct the source document**

- Route different elements to different models for processing

- Reassemble the XML data representation of the document (the semantic layer)

- No rewriting = no hallucinations!

**A note on semantic deconstruction

We break down the source document into understandable “chunks”, and preserve the semantic context. With other tools, the chunking strategy is naive and based on character count. This is another problem that leads to hallucinations because the chunks can lose their meaning when sentences become fragmented thoughts and related paragraphs become separated. Vertesia uses LLMs to semantically chunk content into smaller parts based on human language and the actual meaning of content, so the chunks always retain their intended meaning.
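The difference between the two chunking strategies can be seen in a small Python sketch. This is an illustration under stated assumptions, not how Semantic DocPrep works: Vertesia uses LLMs to find semantic boundaries, while the second function below uses plain paragraph breaks as a simple stand-in. Both function names and the sample text are invented for the example.

```python
def chunk_by_chars(text, size):
    """Naive strategy: cut every `size` characters, ignoring sentence boundaries."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def chunk_by_paragraphs(text, max_chars):
    """Stand-in for semantic chunking: pack whole paragraphs up to max_chars.

    A paragraph is never split, so one longer than max_chars stays whole.
    """
    chunks, current = [], ""
    for para in (p.strip() for p in text.split("\n\n") if p.strip()):
        candidate = f"{current}\n\n{para}" if current else para
        if current and len(candidate) > max_chars:
            chunks.append(current)
            current = para
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


text = (
    "Payment is due within 30 days.\n\n"
    "Late payments accrue interest at 1.5% per month.\n\n"
    "Either party may terminate with 60 days notice."
)

print("Character-count chunks:")
for chunk in chunk_by_chars(text, 40):
    print(repr(chunk))

print("Paragraph-aware chunks:")
for chunk in chunk_by_paragraphs(text, 90):
    print(repr(chunk))
```

The character-count version cuts the second sentence in the middle of a word, while the paragraph-aware version keeps every sentence intact. An LLM-based chunker goes further by grouping content according to meaning rather than whitespace.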

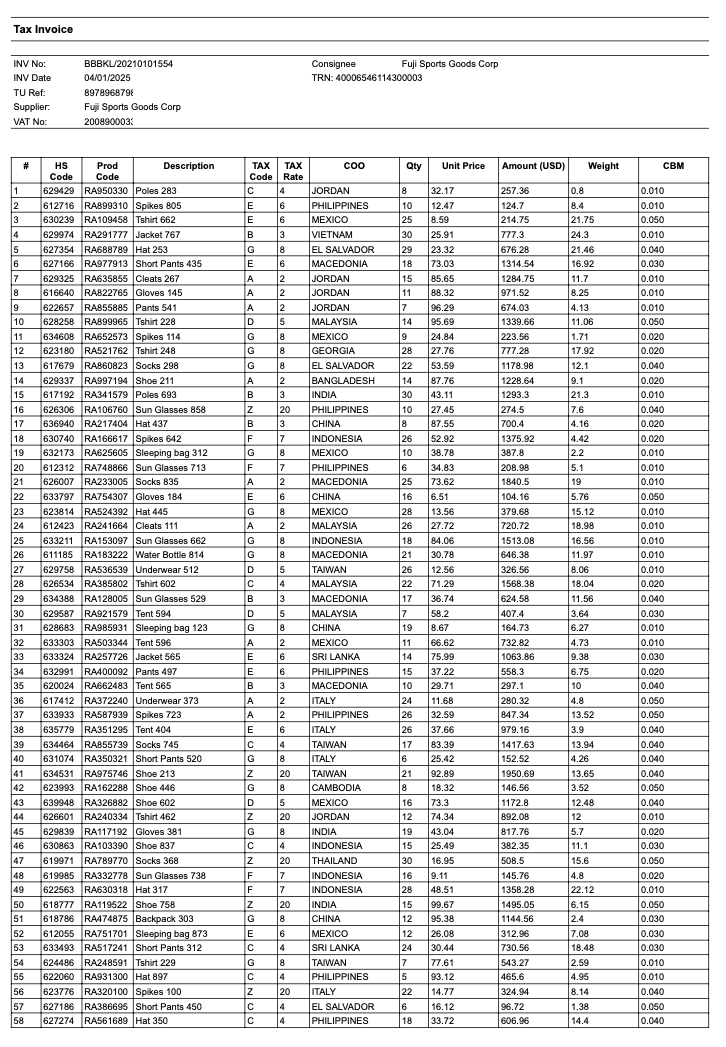

Vertesia’s Semantic DocPrep in action: Extracting line items from an invoice

To illustrate the capabilities of Semantic DocPrep, let’s use a realistic and simple example.

As a global company, we receive a lot of commercial invoices from suppliers around the world, in completely random formats that may also include other information such as a packaging list. For tax and tariff purposes, we need to accurately extract all the line items in a consistent format.

We tried the simple approach of feeding an LLM the plain OCR text of our invoices, but the accuracy is nowhere near where it needs to be to confidently automate the process, and it only gets worse as the number of line items increases.

Example of the first page of an invoice

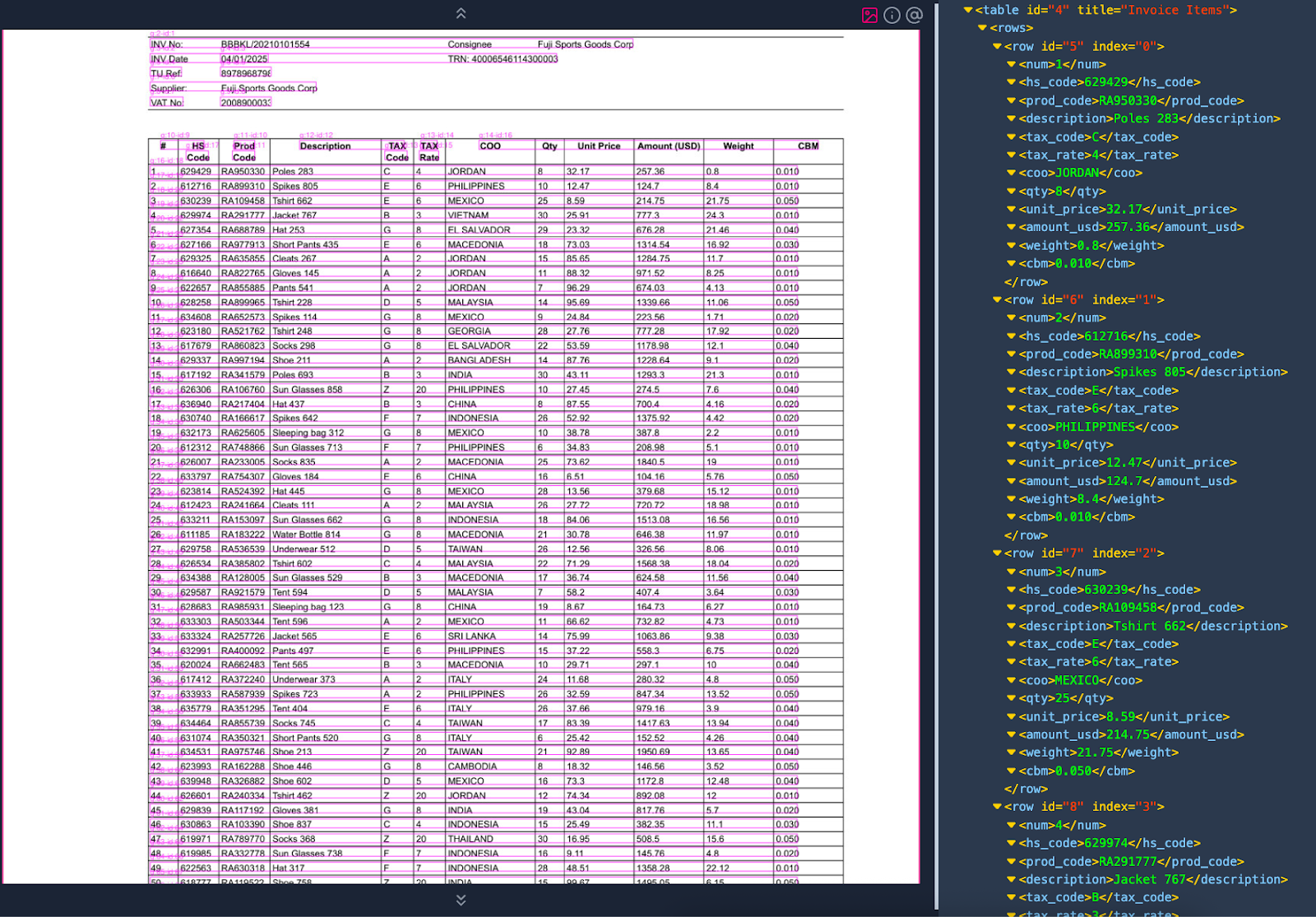

Using Vertesia’s Semantic DocPrep, the first step is to upload the file and trigger a transformation to XML.

Invoice transformed into XML

As shown in the screenshot, Vertesia intelligently maps the table to an XML structure. One key aspect is that the transformation does not rewrite text using an LLM. As a result, the text in the XML file matches the source exactly.

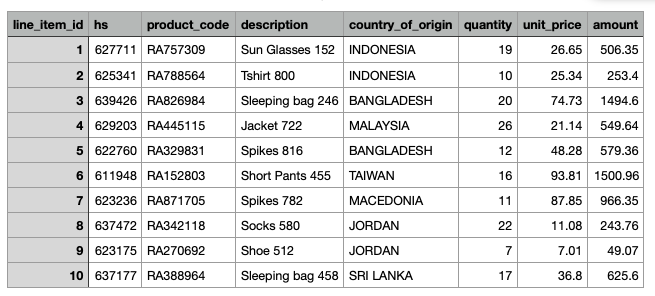

Next, we want to extract the line items in our own consistent format so they can be fed to our downstream applications.

This use case is natively supported by Vertesia, and execution is as simple as an API call. The service automatically identifies the relevant tables in the document and maps the columns to the requested format, a CSV file for example. A complete code sample for this example can be found in our GitHub repository.
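To show what the column mapping amounts to, here is a local Python sketch. It does not call the Vertesia API and does not reproduce the code sample in the repository. The XML table layout, the tag names, the sample rows, and the hand-written `COLUMN_MAP` are all assumptions made up for illustration; in the actual service the mapping is identified automatically rather than written by hand.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical XML for an invoice table. Tag names and the data are invented.
TABLE_XML = """
<table id="t1">
  <row><cell>Item No.</cell><cell>Description of Goods</cell><cell>Qty</cell><cell>Unit Price</cell></row>
  <row><cell>1</cell><cell>Steel bolts, M8</cell><cell>500</cell><cell>0.12</cell></row>
  <row><cell>2</cell><cell>Copper wire, 2mm</cell><cell>40</cell><cell>3.75</cell></row>
</table>
"""

# Source header -> requested output column (hand-written here for illustration).
COLUMN_MAP = {
    "Description of Goods": "description",
    "Qty": "quantity",
    "Unit Price": "unit_price",
}


def table_to_csv(xml_text, column_map):
    """Keep only the mapped columns and emit them as CSV under the new names."""
    rows = [
        [(cell.text or "").strip() for cell in row.findall("cell")]
        for row in ET.fromstring(xml_text).findall("row")
    ]
    header, body = rows[0], rows[1:]
    # Remember the position of each source column we want, plus its target name.
    keep = [(i, column_map[name]) for i, name in enumerate(header) if name in column_map]

    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([target for _, target in keep])
    for row in body:
        writer.writerow([row[i] for i, _ in keep])
    return out.getvalue()


print(table_to_csv(TABLE_XML, COLUMN_MAP))
```

Because the cell text is copied straight from the XML, the values in the CSV are the same strings that appear in the source table. The `csv` module handles quoting, so a description containing a comma still lands in a single column.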

The first few line items mapped to the requested columns

You can start using Vertesia’s Semantic DocPrep by signing up for an account, and you can learn more about it in the documentation.