For decades, Digital Asset Management (DAM) platforms have helped bring order to creative chaos—cataloging images, videos, and brand assets across industries like fashion, pharmaceuticals, and media. They’ve given organizations a central hub to store, search, and secure their content at scale. But one major flaw has held them back: the asset-tagging problem.

Metadata is what makes DAM work. But tagging assets—correctly, consistently, and completely—is tedious, error-prone, and expensive. Creative teams hate doing it. And most organizations don’t have the luxury of hiring a full-time staff of digital librarians to handle the workload.

Enter Vertesia.

Plain and simple, Vertesia is a platform purpose-built to deliver generative AI (GenAI) services at scale. One of its core services is intelligent metadata generation, designed to solve the most persistent challenge in DAM. GenAI agents within our platform can act like an army of tireless, invisible librarians: they tag assets at scale, apply consistent metadata across formats, and eliminate one of the biggest friction points in asset workflows.

Metadata: The Key to Unlocking Content for RAG

Marketers and creative teams have learned that GenAI is only as good as the data you feed it. Yet most of what’s stored in DAM platforms (images, videos, PDFs, and design files) is unstructured by nature. Without accurate classification, tagging, and transcription, these assets are invisible to AI. Metadata is what makes rich media content searchable and usable by large language models (LLMs).

The quality of your metadata can make or break how useful AI becomes in your DAM. But here’s the catch: most DAM systems rely on basic, generic tagging from a single general-purpose model. That might get you broad terms like "woman," "beach," or "car," but not "spring campaign hero shot," "2022 packaging mockup," or "FDA-approved usage image for oncology." Without structured business metadata, even the most powerful search tools return weak results, and GenAI answers built on top of them are prone to hallucination.

Vertesia takes a different approach.

Our platform doesn’t just label what’s in the image—it understands what the image is. A product shot isn’t the same as a concept sketch. A training video isn’t interchangeable with a final commercial. Our AI agents automatically classify assets by type and apply rich, structured metadata aligned to your organization’s unique taxonomy and use cases—or even suggest one based on the asset’s type. It’s a semantic layer built for business, not a one-size-fits-all labeler.
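
To make "aligned to your organization’s taxonomy" concrete, here is a minimal Python sketch under stated assumptions: a hypothetical controlled vocabulary (`TAXONOMY`) and a hypothetical `validate` helper that checks model-suggested tags against it before they are saved. The field names and values are illustrative only; this is not Vertesia’s API or data model.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabulary: allowed values for each metadata field.
# A real taxonomy would come from the organization, not be hard-coded.
TAXONOMY: dict[str, set[str]] = {
    "asset_type": {"product_shot", "concept_sketch", "training_video", "final_commercial"},
    "campaign": {"spring_campaign", "fall_campaign"},
}
REQUIRED_FIELDS = ("asset_type",)


@dataclass
class AssetMetadata:
    asset_id: str
    fields: dict[str, str] = field(default_factory=dict)


def validate(meta: AssetMetadata, taxonomy: dict[str, set[str]] = TAXONOMY) -> list[str]:
    """Return a list of problems; an empty list means the tags fit the taxonomy."""
    problems = []
    for name in REQUIRED_FIELDS:
        if name not in meta.fields:
            problems.append(f"missing required field: {name}")
    for name, value in meta.fields.items():
        allowed = taxonomy.get(name)
        if allowed is None:
            problems.append(f"unknown field: {name}")
        elif value not in allowed:
            problems.append(f"{name}={value!r} not in taxonomy")
    return problems


# Tags suggested by a model are checked before being saved to the DAM.
suggested = AssetMetadata("IMG-001", {"asset_type": "product_shot", "campaign": "spring_campaign"})
print(validate(suggested))  # → []
```

Rejecting out-of-vocabulary values, rather than silently storing them, is what keeps tags consistent across thousands of assets; a production system might instead map near-misses to the closest allowed value.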

And we don’t stop at visuals. We generate embeddings from full text, properties, and image content, giving Retrieval-Augmented Generation (RAG) workflows several complementary ways to find the right asset. That means faster, more accurate asset discovery, more contextually relevant GenAI outputs, and a DAM system that finally lives up to its promise.
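
How several embeddings per asset might be combined at query time is not specified here, so the following is a minimal sketch of one common technique, late fusion: compute a cosine similarity per signal (`text`, `properties`, `image`) and take a weighted average over the signals both the query and the asset have. The weights, signal names, and toy 3-dimensional vectors are assumptions for illustration; real vectors would come from an embedding model.

```python
import math

# Hypothetical weights for each embedding signal; real values would be tuned per use case.
WEIGHTS = {"text": 0.4, "properties": 0.3, "image": 0.3}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def fused_score(query: dict[str, list[float]], asset: dict[str, list[float]],
                weights: dict[str, float] = WEIGHTS) -> float:
    """Weighted average of per-signal cosine similarities, over signals both sides have."""
    total = weight_sum = 0.0
    for signal, w in weights.items():
        if signal in query and signal in asset:
            total += w * cosine(query[signal], asset[signal])
            weight_sum += w
    return total / weight_sum if weight_sum else 0.0


def rank(query: dict[str, list[float]], assets: dict[str, dict[str, list[float]]], top_k: int = 3):
    """Return (asset_id, score) pairs, best first."""
    scored = [(asset_id, fused_score(query, vecs)) for asset_id, vecs in assets.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:top_k]


# Toy 3-dimensional vectors standing in for real embedding-model output.
assets = {
    "hero_shot": {"text": [1.0, 0.0, 0.0], "properties": [1.0, 0.0, 0.0], "image": [0.9, 0.1, 0.0]},
    "concept_sketch": {"text": [0.0, 1.0, 0.0], "properties": [0.0, 1.0, 0.0], "image": [0.0, 1.0, 0.0]},
}
query = {"text": [1.0, 0.0, 0.0], "image": [1.0, 0.0, 0.0]}  # no "properties" vector for this query
print(rank(query, assets)[0][0])  # → hero_shot
```

Averaging only over signals present on both sides lets a text-plus-image query still match assets that also carry property embeddings, without penalizing it for the missing signal.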

Vertesia also integrates with top-tier language models, including multimodal models that can process both images and text. These models recognize and tag objects, concepts, people, and locations, then automatically generate descriptions aligned to your organization’s content guidelines. As a result, every asset is not only accurately labeled but also summarized consistently, improving metadata quality and standardization across your DAM.

Here’s what this looks like in the real world:

A global retail brand wanted to improve how it cataloged apparel assets for its creative and sales teams. Take one example: a designer uploads a low-res art file featuring characters from a popular cartoon show. The sales team later wants to search for shirts featuring specific characters, but without precise metadata, those assets are essentially invisible.

With Vertesia, the brand can automatically populate each asset’s metadata with the correct character names, internal product codes, and descriptive tags, down to asset type, color, and style.

Now anyone in the organization can run semantic searches and get accurate results instantly. Even better, they can browse similar assets for creative inspiration—because everything is searchable, structured, and aligned with the business context.

This is just one example of how Vertesia turns fragmented, inconsistent asset libraries into AI-ready content ecosystems—starting with metadata and scaling into search, enrichment, and generative reuse.

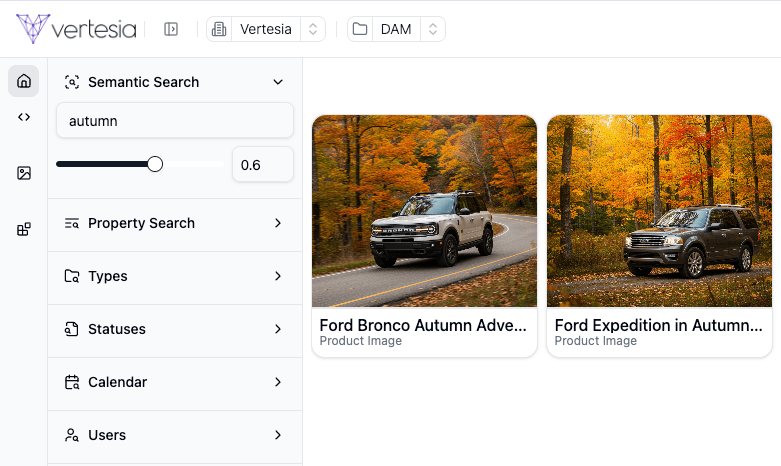

Search, your way: Semantic, graph, and everything in between

There’s a lot of debate out there about the “right” kind of search: semantic search, graph search, keyword search. The truth? It depends on the use case. Different tasks demand different tools. That’s why Vertesia doesn’t lock teams into a single method. Instead, we prepare content in multiple ways, so it’s ready for whatever kind of search your business needs, now and in the future.

Because the use case often isn’t known when content is ingested, Vertesia’s platform takes a flexible, multi-layered approach. Our pluggable API architecture connects to leading transcription engines such as Amazon Transcribe, OpenAI Whisper, and Gladia, which convert audio and video files into complete transcripts.

Once transcribed, the text is automatically chunked based on semantic meaning, and embeddings are generated for similarity search. The resulting text objects are also enriched with metadata, making them ready for downstream systems to leverage in a variety of workflows. As part of the enrichment process, Vertesia can use LLMs to identify the spoken language and generate multilingual versions of both the original content and its enriched metadata—making that speech fully searchable through:

- Traditional structured search, via metadata and full-text queries

- Semantic search, using vector-based similarity and NLP techniques

- Multilingual queries, with native-language results

- Graph or contextual search, depending on enterprise-specific models
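
The transcript flow described here (semantic chunking, embeddings, then structured plus semantic search) can be approximated with the minimal Python sketch below, under loud assumptions. `embed` is a toy bag-of-words counter standing in for a real embedding model; `semantic_chunks` starts a new chunk when adjacent sentences stop resembling each other; `hybrid_search` applies an exact keyword filter (the structured/full-text side) before ranking by vector similarity (the semantic side). All names, the sample transcript, and the 0.2 threshold are hypothetical, not Vertesia’s API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words term-count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_chunks(transcript: str, threshold: float = 0.2) -> list[str]:
    """Split into sentences; start a new chunk when a sentence is dissimilar to the previous one."""
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", transcript) if s.strip()]
    chunks, current = [], []
    for sentence in sentences:
        if current and cosine(embed(current[-1]), embed(sentence)) < threshold:
            chunks.append(" ".join(current))
            current = []
        current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    return chunks


def hybrid_search(query: str, chunks: list[str], required_terms=(), top_k: int = 3):
    """Keep chunks containing every required term, then rank them by similarity to the query."""
    q = embed(query)
    candidates = [c for c in chunks if all(t.lower() in c.lower() for t in required_terms)]
    scored = [(cosine(q, embed(c)), c) for c in candidates]
    return sorted(scored, reverse=True)[:top_k]


TRANSCRIPT = (
    "Welcome to the spring campaign briefing. The spring campaign launches in March. "
    "Next, safety training for warehouse staff. Warehouse training covers forklift safety."
)
chunks = semantic_chunks(TRANSCRIPT)
print(len(chunks))  # → 2
print(hybrid_search("forklift safety training", chunks)[0][1])
```

Filtering first and ranking second is only one way to combine the two search styles; another is to blend keyword and vector scores into a single ranking. Multilingual and graph search are outside the scope of this sketch.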

Whether you're pulling insights from a podcast, surfacing training clips across languages, or matching video transcripts to campaign themes, Vertesia ensures every asset is enriched, indexed, and ready to be found—no matter how you’re searching.

DAM has always been essential, but to unlock its next stage of value, it needs more than storage and access—it needs intelligence. With Vertesia, metadata becomes a strategic asset. Assets are enriched at the point of intake, structured for search, and ready for GenAI from day one.

For marketing, creative, and IT leaders alike, this isn’t just a technical upgrade. It’s a way to reduce manual overhead, increase asset ROI, and future-proof content operations for the AI-enabled enterprise.